
Where platform policies meet payment processors, and everyone’s a critic until they need the First Amendment

This is part 3 of our digital safety trilogy. In part 1, we covered practical digital parenting strategies that actually work—from router-level filtering you can configure yourself to having real conversations with your kids about online risks. Part 2 tackled the false choice between privacy and protection, showing how surveillance measures like facial scans and government ID requirements create more problems than they solve while failing to protect children effectively.

Today we’re examining the final piece of the puzzle: how corporate content moderation has become a bigger threat to free expression than government censorship, and why the current system fails both creators and communities.

Well, well, well, my digital darlings! We’ve reached the final installment of our safety trilogy, and honey, we saved the spiciest topic for last. Free speech and content moderation—where everyone’s a constitutional scholar until their own content gets flagged.

Recent controversies have highlighted an uncomfortable truth: We’ve created a system where corporations wield more power over speech than governments, and somehow that’s supposed to be “freedom.” From Roblox facing lawsuits over platform safety failures while banning investigators trying to catch predators, to payment processors freezing legitimate creators’ earnings based on content ratings, to activist campaigns targeting marginalized creators while missing actual harmful content—the current system is broken in spectacular fashion.

Time for some TechBear truth-telling about who really controls what you see, say, and sell online.

This Week’s Correspondence: When Censorship Gets Creative

Dear TechBear, I’m a small indie game developer, and I spent two years creating a visual novel about a survivor of sexual assault who rebuilds their life and starts a support group to help other survivors heal. It’s tasteful, focuses on recovery and empowerment, and could genuinely help people, but payment processors keep refusing to handle transactions because the game description mentions “sexual assault,” “self-harm,” and “substance abuse.” Meanwhile, I see violent games and actual gambling apps with no problems getting paid. How is this legal? How is this fair? – Censored Creator in California

Darling, welcome to the wild west of economic censorship! You’ve discovered that the First Amendment protects you from government censorship but says absolutely nothing about corporate gatekeepers deciding your empowering story about healing is too “risky” for their brand.

Your situation perfectly illustrates how payment processors have become de facto speech regulators, and their risk-averse algorithms can’t distinguish between content that exploits trauma and content that helps people heal from it. It’s like having a bouncer who kicks out both predators AND the counselors trying to help their victims.

Dear TechBear, I run a Discord server for neurodivergent individuals where we share coping strategies, job hunting tips, and support each other through daily challenges. We welcome anyone with autism, ADHD, or similar conditions regardless of age. Lately, we’ve been getting reported for “suspicious adult behavior” because some members are adults and others are teens, even though we’re just trying to create community for people who understand each other’s experiences. Are neurodivergent adults not allowed to mentor younger community members or share our lived experiences? – Misunderstood Moderator

Oh honey, you’ve stumbled into the intersection of ableism and moral panic! The assumption that neurodivergent adults seeking community with people who share their experiences must be predatory is both discriminatory and dangerous. Many neurodivergent individuals find peer mentorship and shared experience invaluable for developing coping strategies and professional skills.

The real problem isn’t your community—it’s automated systems and moral panic policies that can’t distinguish between genuine peer support and predatory behavior. Neurodivergent individuals deserve intergenerational community spaces where shared experiences can be discussed safely, without being treated as criminals for mentoring younger people facing similar challenges.

The Real-World Crisis: How We Got Here

The current content moderation crisis isn’t theoretical—it’s actively harming both child safety AND creator freedom through systemic failures that prioritize corporate liability over actual protection.

The Roblox Contradiction

Roblox is facing lawsuits for failing to protect children from predators. At the same time, it has banned Schlep, a former user who was sexually harassed as a minor by a Roblox developer. Schlep now runs anti-predator investigations, creating decoy accounts that predators approach first, then turning evidence over to law enforcement. His team claims credit for six arrests.

Roblox banned him for “vigilante behavior” and “deceptive practices” while allowing experiences like “Bathroom Simulator” and “Escape from Epstein Island” to remain on its platform. Children searching for innocent content can be shown 18+ results they never went looking for.

This perfectly illustrates the perverse incentives: Platforms prioritize brand protection over actual safety, banning people who help law enforcement while failing to address the underlying problems.

The Collective Shout Campaign

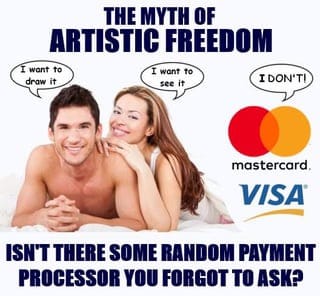

Australian activist group Collective Shout pressured Mastercard and Visa to force Steam and Itch.io to remove “exploitative and abusive content.” While the payment companies deny direct involvement, their network rules about “brand harm” gave the campaign leverage.

The result: Steam removed a swath of adult titles and Itch.io deindexed its adult content wholesale, only restoring some after reviews. The creators hit hardest were marginalized groups (LGBTQ+ creators, women, people of color, disabled creators) whose stories contained filtered keywords even when the content was educational or empowering.

Meanwhile, actually exploitative content that avoids trigger words continues operating without interference.

The Cross-Border Freeze

An overseas creator working for a British company reportedly had 80,000 GBP frozen by PayPal when they tried to transfer earnings from a game that was legal in every jurisdiction where it was sold. While this case is based on anonymous reporting in developer communities, it illustrates the broader pattern of payment processor restrictions affecting international creators.

[Note: The specific 80K case is anecdotal, reported through Reddit’s r/LegalHelpUK community, though similar payment processor restrictions on 18+ content are widely documented by developers.]

According to the reported account, PayPal demanded details about the specific game, then “de-banked” the creator upon discovering its 18+ rating. When the employer tried to receive the funds to write a check, PayPal blocked that transaction too. The creator’s livelihood was threatened not by any government or legal action, but by a US payment processor applying its own moral standards to legal content created in other countries.

This raises fundamental questions about economic sovereignty: Can a US company legally freeze a German citizen’s earnings for creating content that’s legal in Germany, Britain, and every country where it’s sold?

Corporate vs. Government Censorship: Why Both Matter

The Constitutional Confusion

First Amendment Reality Check: The Constitution prevents GOVERNMENT censorship. Private companies can moderate content however they want on their platforms. But here’s where it gets complicated—when a handful of corporations control most online communication and commerce, their moderation decisions have speech implications that rival government censorship.

The Platform Power Problem

Market Concentration Means Speech Concentration:

- 3 companies control most social media (Meta, Google, Twitter/X)

- 2 companies handle most card payment processing (the Visa and Mastercard networks)

- 1 company dominates online retail (Amazon)

- A handful of hosting services support most websites

When these few companies make policy changes, they effectively reshape the boundaries of acceptable speech for billions of people.

Economic Censorship: The Payment Processor Power Play

How It Works

Payment processors, terrified of chargebacks and brand damage, implement content restrictions that are often broader and less transparent than government regulations:

- Fear-based policies: Processors ban entire categories to avoid any risk

- Algorithmic enforcement: Keyword filtering catches legitimate content alongside problematic material

- No appeals process: Unlike government censorship, there’s often no recourse

- Cascading effects: One processor’s ban can trigger bans across the entire financial system
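
To see why keyword filtering sweeps up legitimate content, here is a minimal sketch in Python. The blocklist, the function name `keyword_flag`, and both sample listings are invented for illustration. No processor publishes its real filter, so treat this as an assumption about the general technique, not anyone's actual system.

```python
import re

# Hypothetical blocklist, invented for illustration only.
BLOCKED_TERMS = ["sexual assault", "self-harm", "substance abuse"]


def keyword_flag(description: str) -> list[str]:
    """Return every blocked term that appears in a listing description."""
    text = description.lower()
    return [
        term
        for term in BLOCKED_TERMS
        if re.search(r"\b" + re.escape(term) + r"\b", text)
    ]


# A recovery-focused listing that names its subject plainly.
recovery_game = (
    "A visual novel about a survivor of sexual assault who rebuilds "
    "their life and starts a support group."
)

# A listing that simply avoids every term on the blocklist.
evasive_listing = "Totally normal fun game, wink wink, no rules apply."

print(keyword_flag(recovery_game))
print(keyword_flag(evasive_listing))
```

The recovery story gets flagged for the phrase “sexual assault,” while the evasive listing comes back with an empty list. A filter like this sees words, not intent.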

Real-World Impact

Remember our game developer? Their recovery-focused visual novel gets blocked while genuinely harmful content slides through because it doesn’t trigger keyword filters. The overseas creator reportedly lost 80K GBP not because their work was illegal anywhere, but because PayPal decided 18+ content was too risky for its brand.

The system optimizes for corporate risk management, not societal benefit or free expression.

Cross-Border Economic Control: When US Companies Override Foreign Laws

The Sovereignty Question

The PayPal case raises uncomfortable questions about economic imperialism: Should US payment processors be able to freeze earnings for content that’s legal in the creator’s country, their employer’s country, and every jurisdiction where it’s sold?

Current reality: US-based payment processors effectively export American moral standards globally, overriding local laws and democratic decisions about what content should be legal.

The Currency Weapon

PayPal’s restriction of Steam payments to only the “big 6” currencies creates additional barriers:

- International creators face currency conversion obstacles

- Customers in smaller economies lose access to content

- Economic power concentrates further in major financial centers

- Local payment alternatives get squeezed out of global markets

Legal Frameworks Colliding

What happens when:

- German privacy laws conflict with US content policies?

- British creative works run afoul of American payment processor terms?

- Australian consumer protection laws clash with platform moderation?

Currently, the most restrictive jurisdiction wins, meaning everyone gets limited by the most censorious set of rules.

For a deeper dive into the legal analysis of the Collective Shout campaign and payment processor censorship, attorney Richard Hoeg provides a thorough investigation of the legal frameworks and policy implications.

Hoeg’s analysis raises important questions about treating payment processors as “common carriers”—essential services that must serve legal transactions without content discrimination, similar to how phone companies can’t refuse service based on conversation topics.

The Scale Problem: When Human Judgment Meets Internet Speed

The Impossible Math of Internet Moderation

Daily Content Volume:

- Facebook: 4 billion posts

- YouTube: 500 hours of video uploaded per minute

- Twitter/X: 500 million tweets

- TikTok: 1 billion hours watched daily

Human Moderation Reality: Even with armies of moderators, human review of this volume is mathematically impossible. Hence the rise of algorithmic moderation—which creates its own set of problems.
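
A quick back-of-the-envelope calculation shows the scale, using only the YouTube upload figure quoted above. The 8-hour shift and the idea that each reviewer watches at normal speed are assumptions chosen for illustration, and the function name `reviewers_needed` is hypothetical.

```python
# Figure quoted in the list above: hours of video uploaded to YouTube per minute.
UPLOAD_HOURS_PER_MINUTE = 500

# Assumption for illustration: each reviewer watches at normal speed for a
# full 8-hour shift, with no breaks, appeals, or second opinions.
REVIEW_HOURS_PER_SHIFT = 8


def reviewers_needed(upload_hours_per_minute: int, review_hours_per_shift: int) -> int:
    """Reviewers required to watch one day of uploads once, rounded up."""
    upload_hours_per_day = upload_hours_per_minute * 60 * 24
    # Ceiling division without importing math.
    return -(-upload_hours_per_day // review_hours_per_shift)


upload_hours_per_day = UPLOAD_HOURS_PER_MINUTE * 60 * 24
print(upload_hours_per_day, "hours of new video per day")
print(reviewers_needed(UPLOAD_HOURS_PER_MINUTE, REVIEW_HOURS_PER_SHIFT), "full-shift reviewers")
```

Under those assumptions the total comes to 720,000 hours of new video per day, which would take 90,000 reviewers working full shifts to watch once, for a single platform's uploads.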

When AI Makes Editorial Decisions

Algorithmic Moderation Problems:

- Context blindness: Can’t distinguish between discussing violence and promoting it

- Cultural bias: Trained on data that reflects societal prejudices

- Keyword stupidity: Flags breast cancer awareness as sexual content

- No nuance: Can’t understand sarcasm, historical context, or educational purpose

- Appeals nightmare: Hard to appeal to an algorithm that can’t explain its reasoning

Example Failures:

- Historical education about the Holocaust flagged as hate speech

- Breast cancer support groups tagged as adult content

- Mental health resources blocked for mentioning self-harm

- LGBTQ+ content flagged as “sexual” when it’s just about identity

- Indigenous names and cultural content marked as spam

Cultural and Contextual Challenges

One Platform, Many Cultures

The Global Moderation Problem: What’s acceptable varies dramatically across cultures, but platforms must create universal policies:

- Religious content: Sacred to some, offensive to others

- Political speech: Legal criticism in one country, treasonous in another

- Historical discussion: Educational context vs. glorification debates

- Satire and humor: Cultural differences in what’s funny vs. offensive

- Body positivity: Empowering to some, inappropriate to others

The American Export Problem

Cultural Imperialism Through Platform Policies: Most major platforms are US-based and export American cultural values and legal frameworks globally:

- American concepts of free speech applied worldwide

- US political sensitivities affecting global conversations

- American commercial interests shaping global discourse

- English-language bias in moderation systems

- Western cultural assumptions about appropriate content

Economic Pressures: The Advertiser-Friendly Content Trap

The “Brand Safety” Squeeze

How Advertiser Preferences Shape Speech:

- Demonetization: Content remains visible but creators can’t earn from it

- Shadow banning: Content gets reduced visibility without notification

- Algorithm suppression: Controversial topics get less reach

- Self-censorship: Creators modify content to maintain monetization

Topics That Get the Demonetization Treatment:

- Mental health discussions (too depressing for brands)

- Political content (too controversial)

- Historical topics (too heavy)

- LGBTQ+ content (still considered “not advertiser-friendly” by many)

- Disability advocacy (apparently too niche)

The Independent Creator Squeeze

Payment Processor Policies Disproportionately Affect:

- Independent creators with no corporate backing

- Marginalized communities whose existence is deemed “controversial”

- Educational content that addresses difficult topics

- Mental health resources using “triggering” keywords

- Historical and journalistic content covering sensitive subjects

Meanwhile, Major Corporations Get Different Treatment:

- Violent video games with corporate backing process payments fine

- Gambling apps operate with full payment support

- Major studios can monetize content that would get independent creators banned

- Corporate news outlets can cover topics that get independent journalists demonetized

Case Studies in Content Moderation Gone Wrong

The Roblox Safety Theater

Roblox’s treatment of Schlep illustrates how platforms prioritize liability management over actual safety:

The Context: Schlep was sexually harassed as a minor by a Roblox developer. He now runs anti-predator investigations using decoy accounts that predators approach first. His team works with law enforcement and claims credit for six arrests.

The Platform Response: Roblox banned him for “vigilante behavior” while allowing experiences like “Escape from Epstein Island” to remain on their platform.

The Real Issue: Platforms would rather ban people helping law enforcement than address systemic safety failures that might expose corporate liability.

The Visual Novel Dilemma Revisited

Our game developer’s situation illustrates multiple systemic problems:

- Algorithmic Censorship: Keywords like “sexual assault survivor,” “abuse recovery,” and “trauma support” trigger automatic flags regardless of whether the content is exploitative or empowering

- Economic Censorship: Payment processors become speech gatekeepers without democratic accountability

- Scale Problems: No human review of obviously legitimate content that helps trauma survivors

- Systemic Bias: Recovery and empowerment narratives get treated as “risky” content identical to exploitative material

Meanwhile, violent games with corporate backing process payments without issue, and actual exploitative content that avoids trigger keywords slips through filters.

The 80K Freeze: Economic Warfare Against Legal Content

The overseas creator’s experience demonstrates how payment processor policies override national sovereignty:

The Setup: Creator in Germany working for British company on content legal in both countries and every jurisdiction where sold.

The Trigger: PayPal’s automated systems flagged a large transaction, demanded details about the game’s content rating.

The Result: Complete “de-banking” based on 18+ rating, despite content being legal everywhere it operates.

The Precedent: US payment processors can now freeze earnings for content that violates no laws, answerable to no democratic process, with no meaningful appeals.

The Collective Shout Fallout

The Steam/Itch.io delisting campaign shows how activist pressure combines with payment processor risk aversion to create censorship that misses its stated targets:

The Campaign: Australian group pressures payment processors to force platforms to remove “exploitative” content.

The Result: Platforms pull mature content wholesale to avoid risk, hitting educational and advocacy games alongside actual problematic material.

Who Got Hurt: Marginalized creators whose stories contained filtered keywords, even when content was empowering or educational.

Who Didn’t: Actually exploitative content that avoids trigger words continues operating normally.

The Neurodivergent Community Targeting

The Discord harassment shows how moral panic creates discriminatory enforcement:

The Community: Neurodivergent adults and teens sharing coping strategies and job hunting tips in a peer-support server.

The Attack: Bad actors weaponize reporting systems, claiming any adult presence indicates predatory behavior.

The Assumption: Neurodivergent adults seeking community support are treated as inherently suspicious.

The Impact: Legitimate support communities can’t find stable platforms due to constant harassment and over-moderation.

Technical Solutions That Preserve Free Expression

Decentralized Alternatives

Community-Owned Platforms: Instead of corporate-controlled social media, communities can run their own discussion spaces with their own rules. Think of it like neighborhood associations versus corporate shopping malls—local control over local standards.

Multiple Platform Options: Rather than a few giant companies controlling all online communication, we need many smaller platforms that can specialize in different communities and standards.

User-Owned Data: Your posts, connections, and digital identity should belong to you, not to the platform. If a platform’s policies become problematic, you can take your data and relationships elsewhere rather than starting from scratch.

Improved Moderation Technologies

Context-Aware AI:

- Understanding intent behind content, not just keywords

- Cultural and linguistic sensitivity training

- Appeal systems with human oversight

- Transparency in algorithmic decisions

User-Controlled Filtering:

- Individuals set their own content preferences

- Community-based content rating systems

- Granular control over what content types to see

- Opt-in rather than imposed restrictions
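
User-controlled filtering can be sketched in a few lines of Python. Everything here is hypothetical: the `Post` and `UserPreferences` classes, the tag names, and the idea that tags come from community rating are assumptions for illustration, not a description of any existing platform.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    title: str
    tags: set[str]  # labels applied by community rating (hypothetical scheme)


@dataclass
class UserPreferences:
    # Opt-in: nothing is hidden until the user adds a tag here.
    hidden_tags: set[str] = field(default_factory=set)


def visible_posts(posts: list[Post], prefs: UserPreferences) -> list[Post]:
    """Keep every post that carries none of the tags this user chose to hide."""
    return [post for post in posts if not (post.tags & prefs.hidden_tags)]


posts = [
    Post("Support group meetup notes", {"mental health"}),
    Post("Horror game trailer", {"violence", "18+"}),
    Post("Job hunting tips", set()),
]

# Default preferences hide nothing; the second user opts in to hiding violence.
default_view = visible_posts(posts, UserPreferences())
filtered_view = visible_posts(posts, UserPreferences(hidden_tags={"violence"}))

print([p.title for p in default_view])
print([p.title for p in filtered_view])
```

The design choice that matters is the default: a new user sees everything, and each restriction is something that person switched on for themselves, so one reader's filter never removes a post from anyone else's view.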

The Economic Reality of Free Expression

Who Can Afford Free Speech?

Current System Advantages:

- Large corporations with legal teams to navigate complex policies

- Established creators with diverse revenue streams

- Mainstream content that doesn’t trigger algorithmic flags

- Well-funded operations that can absorb compliance costs

Current System Disadvantages:

- Independent creators dependent on platform monetization

- Marginalized communities discussing “controversial” topics

- Educational and advocacy organizations with limited resources

- International creators navigating US-centric platform policies

The Consolidation Problem

Market Concentration Effects: When a few companies control most online communication and commerce, their private decisions have public consequences comparable to government censorship.

Breaking the Bottlenecks:

- Antitrust enforcement to prevent communication monopolies

- Interoperability requirements so users aren’t locked into single platforms

- Common carrier obligations for essential communication services

- Public options for digital infrastructure

The Path Forward: Sustainable Digital Free Expression

What Actually Works

Multi-Stakeholder Governance: Platform policies should include input from users, civil society, experts, and public interest groups, not just corporate executives and advertisers.

Enforceable Standards with Appeal Rights: Some content restrictions are necessary, but they need transparent processes, human review options, and accountability mechanisms. Pure community self-regulation often fails, but so does pure corporate control.

Interoperability and Choice: Users should be able to move between platforms easily, forcing companies to compete on policies rather than network lock-in effects.

Economic Diversification: Reducing dependence on advertising revenue that incentivizes engagement-maximizing content regardless of social impact.

Technical Implementation

Portable Social Networks: Imagine if your contact list, posts, and social connections worked like email—you could change providers without losing your relationships or starting over.

Community-Run Discussion Spaces: Instead of relying on corporate platforms, communities can run their own forums with rules that make sense for their specific needs.

Privacy-First Communication Tools: End-to-end encrypted messaging and anonymous posting options that enable free expression without creating harassment opportunities.

Transparent Community Governance: Clear, community-controlled processes for content decisions with real appeals processes and accountability.

The TechBear Bottom Line

Free speech online isn’t about the right to say anything anywhere—it’s about ensuring diverse voices can participate in digital democracy without being silenced by corporate risk management or algorithmic bias.

The current system where payment processors can freeze 80K GBP for legal creative work, where platforms ban anti-predator investigators while hosting “Escape from Epstein Island,” where activist campaigns hit marginalized creators while missing actual harmful content—this isn’t sustainable democracy.

When a German creator working for a British company on content legal in both countries can have their earnings frozen by a US payment processor with no appeals process, we’re not talking about corporate free speech rights anymore. We’re talking about economic colonialism.

The real threat to free expression isn’t government censorship—it’s the consolidation of communication and commerce power in unaccountable corporate hands, combined with automated systems that can’t distinguish between harmful content and legitimate discourse about difficult topics.

The solution isn’t less moderation—it’s better moderation that’s transparent, accountable, and designed to enable rather than restrict legitimate expression.

The solution isn’t no platform governance—it’s governance systems that include the people affected by the policies, not just the corporations profiting from them.

The solution isn’t unlimited free speech—it’s systems sophisticated enough to distinguish between harmful content and legitimate discourse about difficult topics.

We can have platforms that are both safe and free, that protect children without surveilling everyone, that enable diverse communities without enabling harassment. But only if we stop accepting “it’s a private company” as the end of the conversation about digital rights.

Your voice matters. Your community matters. Your right to participate in digital democracy matters. And none of that should depend on whether an algorithm trained on biased data thinks your existence is “advertiser-friendly,” or whether a payment processor in a foreign country approves of your legal creative work.

For more information about these legislative and platform policy issues, or to share your views with your representatives, contact your local congressional offices or visit congress.gov to find your representatives’ contact information. For those not in the US, please follow your local legislative procedures.

Got questions about protecting free expression while maintaining safe online spaces? Email TechBear at therealtechbeardiva@gmail.com—put “Ask TechBear” in the subject line, because someone needs to explain why corporate censorship isn’t the price of digital safety.

Remember: Free speech isn’t just about grand political statements—it’s about the right of trauma survivors to share their healing stories, the right of neurodivergent adults to find community, the right of independent creators to make a living from legitimate content, and the right of all of us to participate in digital democracy without corporate gatekeepers deciding what we’re allowed to say, see, or sell.

That’s not just a free speech issue—it’s a human dignity issue. And TechBear doesn’t compromise on human dignity.

For business inquiries, quotes, or when you need someone to wrangle the digital chaos in your life, reach out to Jason at jason@gymnarctosstudiosllc.com. I’ll dust off my “Chief Everything Officer, Evil Mastermind, and Head Brain-Squirrel Herder” hats and give you the professional response you’re looking for.

TechBear and I post from our not-so-secret lair in Edina, MN, serving the Twin Cities Metro area and beyond.