When safety measures become surveillance infrastructure, and why your face scan won’t save your soul

Buckle up, buttercups! We’re diving into the murky waters where “child safety” meets mass surveillance, and let me tell you—it’s more crowded down here than a Black Friday sale at MicroCenter.

This is part 2 of our 3-part series on online safety and digital rights. Last week, we covered practical digital parenting strategies that actually work, from router-level filtering you can set up yourself to having real conversations with your kids about online risks. Those tips focused on giving families the tools they need without requiring a computer science degree or government surveillance.

Today we’re tackling the big question politicians and tech companies don’t want you to ask: do we really have to choose between privacy and protection? Spoiler alert: we don’t. Next week, we’ll explore how corporate censorship is reshaping free speech online in ways that might surprise you.

Privacy vs. Protection

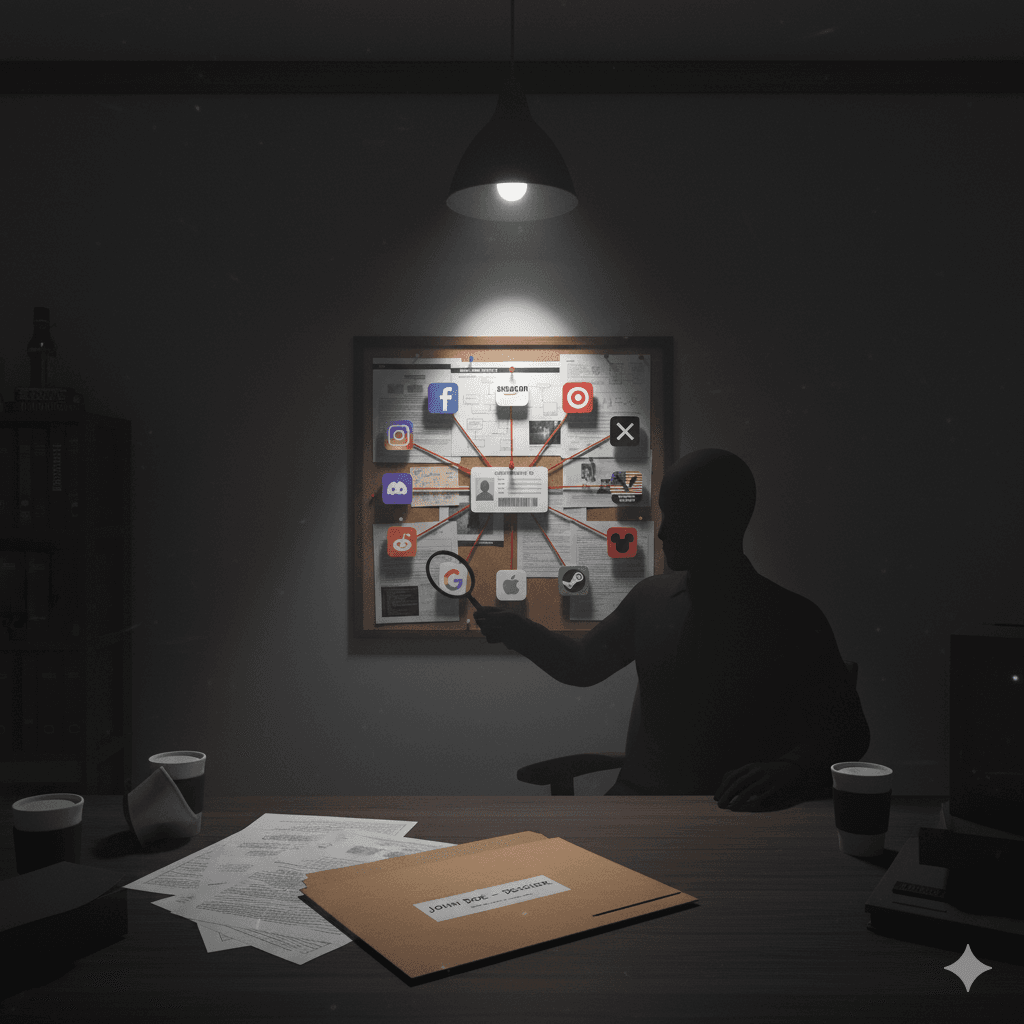

Between legislative proposals like SCREEN, KOSA, and IODA (and their internationally enacted counterparts) demanding facial scans and government ID uploads to third-party services, and companies creating databases that would make Big Brother weep with joy, we need to talk about what happens when “think of the children” becomes “monitor all the citizens.”

As Nora Lewin (played expertly by Dianne Wiest) wisely noted in Law & Order: “When you sacrifice privacy for security, you’re liable to lose both.” And honey, we’re about to lose both in spectacular fashion.

The Surveillance Sweet-Talk

Recent events have politicians and platforms scrambling to implement “safety” measures that sound protective but function like digital strip searches. Let’s examine what’s really happening when Roblox loses $12 billion in market cap and suddenly everyone’s demanding your driver’s license to play a block-building game.

This Week’s Reality Check: Letters from the Panopticon

Dear TechBear, I heard about new laws requiring age verification with facial scans and ID uploads. Finally, someone’s doing something about online safety! My teenager will hate it, but if it protects children, isn’t it worth giving up a little privacy? What could go wrong with everyone having digital IDs? – Security-Minded in Seattle

You’re essentially asking: “What could possibly go wrong with creating a centralized database containing facial scans, government IDs, and online behavior patterns of every internet user?” It’s like asking what could go wrong with storing everyone’s house keys in one location and posting the address on social media.

Dear TechBear, I work for a small tech company, and we’re panicking about compliance with new age verification requirements. We’re looking at systems that would cost us $200,000 annually and require storing sensitive user data we never wanted to handle. Is this really making anyone safer, or are we just creating bigger targets for hackers? – Stressed Startup Steve

Steve, you’ve hit the nail on the head with a sledgehammer. You’re being forced to become a high-value target storing exactly the kind of data that hackers dream about. Congratulations, you’re now in the identity theft enablement business!

This is regulatory compliance theater at its finest—expensive, invasive, and ultimately counterproductive to actual safety.

The Technical Truth About “Safety” Measures

The False Binary of Safety vs. Privacy

Legislators and platforms love presenting this as a simple choice: “Either we scan everyone’s face, or children get hurt.” But that’s like saying either we search every house without warrants, or crime goes unsolved.

The reality: Effective safety and strong privacy can coexist. In fact, privacy-respecting approaches often work BETTER than surveillance-heavy ones.

What Age Verification Really Creates

Proposed Systems Would:

- Create centralized databases of biometric data linked to online behavior

- Require third-party companies to handle government identification documents

- Generate detailed profiles of internet usage patterns

- Enable cross-platform tracking and surveillance

- Provide law enforcement with unprecedented monitoring capabilities

What They DON’T Do:

- Actually prevent determined minors from accessing content

- Address the root causes of online harm

- Protect against the most common online risks children face

- Create meaningful safety improvements proportionate to privacy invasion

Data Collection Creep: How Protection Becomes Surveillance

History shows us exactly what happens when surveillance infrastructure gets built “for the children”:

- Phase 1: “We just need this data to verify ages and protect kids.”

- Phase 2: “Well, we already have this data, so let’s use it for fraud prevention.”

- Phase 3: “This database would be really useful for law enforcement investigations.”

- Phase 4: “Why not expand this to prevent other types of harm?”

- Phase 5: “Congratulations, you now live in a surveillance state, but hey, think of the children!”

The Technical Architecture of Control

When you require identity verification for internet access, you’re not just creating a safety measure—you’re building infrastructure for:

Government Surveillance:

- Real-time monitoring of online activities

- Cross-platform user tracking and profiling

- Retrospective investigation of internet usage

- Predictive analysis of behavior patterns

Corporate Data Mining:

- Detailed demographic profiling for advertising

- Behavioral analysis for algorithmic manipulation

- Cross-service user identification and tracking

- Economic surveillance for pricing optimization

International Complications:

- Foreign government access to citizen data

- Diplomatic leverage through information control

- Economic espionage opportunities

- Authoritarian regime precedent-setting

Identity Verification: A Hacker’s Holiday

Creating Irresistible Targets

Imagine you’re a cybercriminal. What would be more valuable than a database containing:

- Facial biometric data

- Government ID scans

- Real names linked to online activities

- Age and demographic information

- Behavioral patterns and preferences

You’ve just created the mother lode of identity theft resources. It’s like putting a “Free Identity Theft Starter Kit” sign on every database.

But here’s the real nightmare: Biometric data creates permanent links between everything.

Even if companies keep their data in separate “silos,” using the same face scan or government ID to verify your identity creates a master key that unlocks all of them. Here’s how it works:

The Third-Party Verification Trap:

- Company A (let’s say Facebook) hires ThirdParty Corp to handle age verification

- Company B (Target) uses the same ThirdParty Corp for their loyalty program verification

- Government Agency C also uses similar biometric matching for their databases

Now when ThirdParty Corp gets breached (and it will), the attacker doesn’t just get one company’s data—they get the key to link Facebook’s information with Target’s shopping habits AND the state’s criminal records database.

What This Creates:

- Your political views (from social media)

- Your shopping patterns (retail loyalty programs)

- Your financial transactions (credit card verification)

- Your legal history (government databases)

- Your content consumption (platform activity)

All linked together by your face scan or government ID.
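To see why one shared identifier is such a problem, here is a minimal Python sketch of the linking step. Everything in it is made up for illustration: the `verification_id` function is a hypothetical stand-in for whatever stable identifier a verifier derives from a scan (real biometric matching is fuzzier than a hash), and the three “silos” hold invented records.

```python
import hashlib

def verification_id(face_scan: bytes) -> str:
    """Hypothetical stand-in for a stable ID derived from a face scan."""
    return hashlib.sha256(face_scan).hexdigest()

# Invented records: three separate databases keyed by the same ID.
vid = verification_id(b"pretend-biometric-template")
social_silo = {vid: {"political_views": "posts about local elections"}}
retail_silo = {vid: {"shopping": "loyalty card purchases"}}
records_silo = {vid: {"legal_history": "parking tickets"}}

def link_profiles(*silos):
    """Merge every silo's record for each shared ID into one profile."""
    merged = {}
    for silo in silos:
        for key, record in silo.items():
            merged.setdefault(key, {}).update(record)
    return merged

profiles = link_profiles(social_silo, retail_silo, records_silo)
print(sorted(profiles[vid]))  # ['legal_history', 'political_views', 'shopping']
```

No clever hacking required: once the same key appears in every database, stitching the profiles together is a few lines of code.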

The Blackmail Goldmine: Suddenly, a criminal knows you shop at certain stores, hold particular political beliefs, consume specific content, and have a complete financial profile. They can target you for harassment, discrimination, or blackmail with perfect precision and complete plausible deniability.

“Oh, we’re not targeting you for your political beliefs; we just happened to notice your shopping patterns suggest you’d be interested in this ‘opportunity.’”

The Security Nightmare

Single Point of Failure: Instead of distributed risk, you now have centralized catastrophic failure potential.

Attack Surface Multiplication: Every age verification provider becomes a high-value target.

Data Persistence: Unlike credit cards that can be cancelled, you can’t get a new face or new birthdate when your biometrics are compromised.

Cross-Service Compromise: One breach potentially compromises your identity across every platform requiring verification.

Real-World Precedent: Why This Always Goes Wrong

Remember when Equifax exposed the personal information of 147 million people? When T-Mobile was breached multiple times? When Facebook had repeated data leaks affecting hundreds of millions of users? When Yahoo lost data on 3 billion accounts?

Now imagine those companies also had your facial biometrics and government ID scans. Sweet dreams!

Privacy-Preserving Alternatives That Actually Work

Zero-Knowledge Age Verification

How it works: Cryptographic proofs that confirm you meet age requirements without revealing your actual age, identity, or creating centralized databases.

Benefits:

- No personal data stored or transmitted

- Cannot be used for tracking or surveillance

- Preserves anonymity while confirming eligibility

- Sharply reduces identity theft risk, since there is no stored identity data to steal
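To make that less abstract, here is a minimal Python sketch of one simple way to prove “old enough” without revealing a birthdate. Fair warning, sugar: this is a hash-chain trick, not a true zero-knowledge proof, and it is not taken from any of the bills or vendors discussed here. The function names (`issue_credential`, `make_proof`, `verify`) are made up for illustration, and the sketch assumes a trusted issuer who would sign the commitment in a real system; signing is left out to keep it short.

```python
import hashlib
import secrets

def hash_n(value: bytes, times: int) -> bytes:
    """Apply SHA-256 to value the given number of times."""
    for _ in range(times):
        value = hashlib.sha256(value).digest()
    return value

def issue_credential(age: int):
    """Issuer side (assumed trusted): returns (secret seed, public commitment)."""
    seed = secrets.token_bytes(32)
    commitment = hash_n(seed, age + 1)
    return seed, commitment

def make_proof(seed: bytes, age: int, min_age: int) -> bytes:
    """User side: a value exactly min_age hashes short of the commitment."""
    if age < min_age:
        raise ValueError("cannot prove an age requirement that is not met")
    return hash_n(seed, age + 1 - min_age)

def verify(proof: bytes, commitment: bytes, min_age: int) -> bool:
    """Site side: hash the proof min_age times and compare to the commitment."""
    return hash_n(proof, min_age) == commitment

seed, commitment = issue_credential(age=34)
proof = make_proof(seed, age=34, min_age=18)
print(verify(proof, commitment, min_age=18))  # True
```

The site only learns that the user clears the threshold; it never sees the number 34, a name, or a face. One caveat worth stating plainly: reusing the same commitment on every site would turn it into a tracking ID of its own, which is one of the problems full zero-knowledge schemes are designed to avoid.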

Client-Side Content Filtering

The concept: Put filtering power in users’ hands rather than creating centralized chokepoints.

Implementation:

- Parents configure filtering on their own devices

- Content ratings provided through decentralized systems

- No central authority controls access

- Users maintain control over their privacy settings
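As a rough illustration of the idea, here is a small Python sketch of a filter that lives entirely on the family’s own device. The `FamilyFilter` class, the three-step rating scale, and the `.test` domains are all hypothetical; real parental-control tools differ in the details. The point is only that the decision is made locally, with settings the parent chose, and nothing about the user is sent anywhere.

```python
from dataclasses import dataclass, field

# Hypothetical rating scale, least to most restricted.
RATING_ORDER = ["everyone", "teen", "mature"]

@dataclass
class FamilyFilter:
    """Settings a parent configures on the device; nothing leaves it."""
    max_rating: str = "teen"
    blocked_domains: set = field(default_factory=set)

    def allows(self, domain: str, rating: str) -> bool:
        """Allow a site unless it is blocklisted or rated above the limit."""
        if domain in self.blocked_domains:
            return False
        return RATING_ORDER.index(rating) <= RATING_ORDER.index(self.max_rating)

kid_filter = FamilyFilter(max_rating="teen",
                          blocked_domains={"example-casino.test"})
print(kid_filter.allows("example-games.test", "everyone"))  # True
print(kid_filter.allows("example-forum.test", "mature"))    # False
print(kid_filter.allows("example-casino.test", "everyone")) # False
```

Notice what is missing: no ID upload, no face scan, no central database. The parent changes `max_rating` as the kid grows up, and that is the whole compliance program.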

Distributed Moderation Systems

Community-based safety: Let communities moderate themselves with transparent, accountable processes rather than top-down control systems.

Advantages:

- Context-sensitive moderation decisions

- Community ownership of safety standards

- Reduced single points of failure

- Preservation of diverse online communities

Economic Censorship: The Payment Processor Problem

How Financial Control Becomes Speech Control

Payment processors have become the de facto censors of the internet:

The Mechanism:

- Payment companies fear brand image damage

- They implement overly broad content restrictions

- Creators self-censor to ensure payment processing

- Legitimate content gets blocked alongside problematic material

Who Gets Hurt Most:

- Independent creators with limited platform options

- Marginalized communities whose experiences are deemed “controversial”

- Educational content addressing difficult but important topics

- Mental health resources that use triggering keywords to help people find support

The Algorithmic Censorship Problem

Keyword-based blocking creates absurd situations where:

- Breast cancer awareness gets flagged as sexual content

- Historical education about genocide gets blocked as violent content

- Support resources for sexual assault survivors are categorized as adult content

- Anti-bullying campaigns get blocked for mentioning bullying

- Self-harm prevention resources can’t use the words they need to help people find them

- Educational content about eating disorder recovery gets flagged for discussing harmful behaviors

Real-World Impact: Consider a visual novel featuring a protagonist who’s a survivor of sexual assault. The story focuses on their healing journey and how they build a support group to help other survivors. It’s clearly beneficial, empowering content that could help real people process trauma and find community.

But automated keyword filtering would likely block it because the game description includes phrases like “sexual assault survivor,” “abuse recovery,” or “trauma support group” – the very words people searching for help would use.
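Here is a minimal Python sketch of how that kind of filter misfires. The blocklist and the sample descriptions are invented for illustration, and real moderation systems are more elaborate than a substring check, but the failure mode is the same: the filter sees words, not context.

```python
# Hypothetical blocklist of the sort a naive filter might use.
BLOCKED_KEYWORDS = {"sexual", "assault", "abuse", "self-harm", "breast"}

def naive_filter(text: str) -> set:
    """Return every blocklisted keyword that appears in the text."""
    lowered = text.lower()
    return {kw for kw in BLOCKED_KEYWORDS if kw in lowered}

# Invented descriptions: two helpful resources and one control.
descriptions = {
    "visual novel": "A sexual assault survivor builds a trauma support group.",
    "health campaign": "Breast cancer awareness month: schedule your screening.",
    "cooking blog": "Weeknight pasta recipes for busy families.",
}

for name, text in descriptions.items():
    hits = naive_filter(text)
    print(name, "BLOCKED" if hits else "allowed", sorted(hits))
```

The survivor story and the cancer campaign both get blocked, while the pasta sails through. The filter did exactly what it was told, which is the problem.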

International Implications: A Global Game of Telephone

The Authoritarian Precedent

When democratic countries implement mass surveillance “for child safety,” authoritarian regimes take notes:

- “If the US requires identity verification for internet access, surely we can too.”

- “If facial scanning is acceptable for child protection in Europe, we’ll expand it for national security.”

- “If centralized content control works for America, it’ll work for us too.”

The Data Collection Reality: We’re Already Living in the Panopticon

The Voluntary Surveillance We’ve Already Accepted

Facebook/Meta knows:

- Your face (facial recognition from photos)

- Your social connections and family relationships

- Your political views and interests

- Your location patterns and travel history

- Your shopping behavior and brand preferences

Google knows:

- Your search history and browsing patterns

- Your email content and contacts

- Your location 24/7 (if location services enabled)

- Your voice patterns (Google Assistant)

- Your photo library and facial recognition data

Credit card companies know:

- Where you go and when

- What you buy and how much you spend

- Your lifestyle and consumption patterns

- Your financial stability and reliability

The Uncomfortable Truth: Privacy is Already Compromised

So why does government-mandated age verification matter if we’ve already given up our privacy?

Scale and Scope: While corporate surveillance is extensive, it’s fragmented. Government-mandated systems create unified, comprehensive profiles.

Voluntary vs. Mandatory: You can choose not to use Facebook. You can’t choose not to participate in government-required verification systems.

Cross-Platform Integration: Current corporate surveillance is siloed. Age verification systems would legally require linking previously separate online activities.

The “Alternative” That Makes Everything Worse

“Don’t worry about facial scans and ID uploads – you can just use a credit card for age verification!”

This supposedly privacy-friendly alternative actually creates a perfect storm. We’re already seeing this in action: Valve now requires UK Steam users to validate a credit card to access 18+ content, under the UK’s counterpart to these proposed US laws. The theory sounds reasonable: banks can’t issue credit cards to minors, so having a card on file proves you’re over 18 without building tracking databases.

But here’s the reality check: It doesn’t actually work, and it creates new problems.

What Credit Card Verification Actually Does:

Payment Processor Censorship Amplification:

- Credit card verification gives payment processors direct control over content access

- Expands their content gatekeeping power from commerce to basic internet access

- Creates the Collective Shout scenario on steroids—now they control access, not just payment

Data Linking Goldmine:

- Ties real financial identity to online activity across platforms

- Creates unified tracking across previously separate services

- Links online behavior to offline financial patterns

Economic Exclusion:

- Excludes people without credit cards (young adults, immigrants, low-income individuals)

- Creates financial barriers to internet access

- Enables discriminatory access based on credit history

And It Doesn’t Even Work:

- Parents share Steam accounts with kids (the credit card proves nothing about who’s actually using it)

- Children find other ways to access content (the “forbidden fruit” principle in action)

- Determined minors can still bypass restrictions

You get neither privacy protection NOR content access freedom—instead you get financial surveillance PLUS payment processor content control PLUS it doesn’t actually protect children.

Threat Modeling: What Are We Actually Protecting Against?

High-Probability, Low-Impact vs. Low-Probability, High-Impact

Current “Safety” Measures Focus On:

- Stranger danger (statistically rare)

- Explicit content exposure (manageable through other means)

- Platform-specific harms (solvable through better platform policies)

While Ignoring More Common Issues:

- Cyberbullying and social cruelty

- Algorithmic manipulation and addiction

- Data exploitation by legitimate companies

- Mental health impacts of social media

And Creating New Risks:

- Mass surveillance infrastructure

- Identity theft vulnerability

- Authoritarian precedent-setting

- Economic censorship expansion

The TechBear Technical Alternative

What Actually Works for Safety

Education Over Elimination: Teach people to navigate risks rather than trying to eliminate all risks.

Transparency Over Surveillance: Make platform policies and algorithms transparent rather than making users transparent to platforms.

User Control Over Central Control: Give individuals and families tools to manage their own safety rather than mandating one-size-fits-all solutions.

Community Moderation Over Corporate Censorship: Enable communities to set and enforce their own standards rather than imposing universal restrictions.

A Practical Implementation

For Platforms:

- Clear, consistent community guidelines

- Transparent moderation processes with appeals

- User-controlled filtering and blocking tools

- Regular transparency reports on content decisions

For Families:

- Education about online risks and navigation

- Communication channels for reporting problems

- Age-appropriate digital literacy training

- Tools for family-level content management

For Governments:

- Focus on prosecuting actual crimes rather than preventive surveillance

- Ensure corporate accountability for data handling

- Protect user privacy rights in legislation

- Resist the urge to implement technological solutions to social problems

The Economic Reality Check

What This Actually Costs

Implementation Costs:

- Small businesses: $50,000-$500,000 annually for compliance

- Medium enterprises: $500,000-$2,000,000 annually

- Large platforms: $10,000,000+ annually

Who Bears the Cost:

- Small creators priced out of platforms

- Independent businesses unable to compete

- Innovation stifled by compliance overhead

- Users who ultimately pay through higher prices and fewer choices

The Innovation Tax

Every dollar spent on surveillance infrastructure is a dollar not spent on:

- Better user experience design

- Improved content moderation algorithms

- Mental health support features

- Educational content development

- Accessibility improvements

The Path Forward: Practical Solutions That Actually Work

Simple, Proven Approaches

Better Parental Controls (That Parents Actually Control):

- Router-level filtering that families configure themselves

- Device-specific restrictions parents can understand and manage

- Age-appropriate account settings that don’t require government ID

- Clear, simple tools that work without technical expertise

Platform Accountability Without Surveillance:

- Transparent community guidelines with clear examples

- Human moderators for context-sensitive decisions

- Appeals processes that actually work

- Regular public reports on what content gets removed and why

Education That Makes Sense:

- Digital literacy programs that teach critical thinking

- Age-appropriate discussions about online risks

- Teaching kids how to report problems and seek help

- Resources for parents who didn’t grow up with the internet

What We Can Build On

User-Controlled Systems: Instead of asking “How do we verify everyone’s age?” ask “How do we give families the tools they need?”

Community Standards: Let communities decide what’s appropriate for them, rather than imposing universal restrictions that don’t fit anyone’s actual needs.

Incremental Improvements: Make existing systems work better instead of building entirely new surveillance infrastructure.

The TechBear Bottom Line

The choice between privacy and safety is a false binary designed to make you accept surveillance you’d otherwise reject. Real security comes from strong privacy protections, not from eliminating privacy in the name of protection.

When Roblox faced lawsuits over safety failures and documentaries exposing how platforms prioritize profits over child protection, the response wasn’t “implement better safety measures.” It was “implement more surveillance.” Creating databases of facial scans and government IDs isn’t better safety—it’s just more invasive.

The technical reality: Privacy-preserving safety measures often work better than privacy-invasive ones because they:

- Don’t create high-value targets for attackers

- Maintain user trust and cooperation

- Scale without creating surveillance infrastructure

- Respect diverse community standards and values

As for “When you sacrifice privacy for security, you’re liable to lose both”—truer words were never spoken. These systems will inevitably be hacked, misused, expanded beyond their original purpose, and leveraged by bad actors you never intended to empower.

The real question isn’t whether you trust the current system with your data—it’s whether you trust every future system, administrator, hacker, and government that will eventually have access to it.

Because once you build surveillance infrastructure, it rarely gets dismantled. It just finds new purposes.

Coming up in part 3: We’ll explore how corporate censorship has become the new battleground for free speech, why payment processors have more power over online content than governments, and what this means for independent creators and small businesses trying to build something meaningful online.

For more information about these legislative proposals or to share your views with your representatives, contact your local congressional offices or visit congress.gov to find your representatives’ contact information. For those not in the US, please follow your local legislative procedures.

Got questions about protecting your privacy without sacrificing safety? Email TechBear at therealtechbeardiva@gmail.com—put “Ask TechBear” in the subject line and you might just get featured in a future column, complete with all the sweetness, sass, and good-natured roasting we know and love.

For business inquiries, quotes, or when you need someone to wrangle the digital chaos in your life, reach out to Jason at jason@gymnarctosstudiosllc.com. I’ll dust off my “Chief Everything Officer, Evil Mastermind, and Head Brain-Squirrel Herder” hats and give you the professional response you’re looking for.

TechBear and Jason post from our not-so-secret lair in Edina, MN, serving the Twin Cities Metro area and beyond.