Helloooo, my darling TechnoCubs! Your favorite IT diva is back with another Friday Funday adventure, and honey, this one’s a doozy!

I’m currently three episodes behind on “How I Met Your Motherboard” (will Ted finally find true love with the right operating system?), and I just started “Law & Order: Technical Services Division” — where every case gets solved with a dramatic “ENHANCE!” and somebody always forgets to check the logs. Plus I’ve got the season finale of “The Silicon Valley Girls” waiting on my DVR, and don’t even get me started on how behind I am on “L * I * N * U * X” (will Ross and Rachel ever figure out their relationship status, or will they just keep arguing about vi versus emacs forever?). But NOOO, duty calls at 4 AM with those three little words every IT professional dreads: “The AI’s malfunctioning.”

So grab your favorite honey-mead cocktail (or whatever passes for coffee in your corner of the galaxy), because we’re about to take a little trip to Jupiter. Well, technically a government facility outside Houston, but the AI thinks it’s going to Jupiter, so… close enough!

The Call That Ruined My Morning

Listen, sugar, there are emergency tech calls, and then there are “for the love of Bill Gates, what fresh hell is this” calls. This one fell squarely in the second category.

Dispatch doesn’t give me an address — they give me coordinates and a security clearance number that’s longer than my last relationship. When the government starts rattling off numbers that sound like they’re ordering a nuclear submarine, you know you’re not dealing with “my printer won’t print” issues.

I roll up to this facility that screams “we have more taxpayer dollars than common sense.” The kind of place where they make me surrender my phone, my tablet, AND (this is where I draw the line) my emergency flask of “helpdesk water.” It’s honey-mead bourbon this time.

“Ma’am… Sir… uh…” the security guard stammers, clearly trying to process my fabulous presentation while eyeing my bedazzled toolkit suspiciously. “No outside electronics.”

“‘Sir’ will be just fine, Sergeant,” I say with a patient smile. After twenty years in IT, honey, I’ve seen it all.

“Sugar,” I purr, “that toolkit contains the digital equivalent of the Jaws of Life. You want your problem fixed, or do you want to explain to your boss why the fancy computer is still having a tantrum?”

Welcome to NASA’s Fanciest Panic Room

They escort me through corridors that look like a sci-fi movie set had a baby with a dental office. Everything’s white, sterile, and probably costs more per square foot than my apartment.

I’m led to a control room with more monitors than a Best Buy on Black Friday. Each screen shows a different area of what looks like a spacecraft having an existential crisis in the void of space.

On the central monitor, there’s an astronaut in a pristine white suit, floating in the vacuum like the world’s most expensive, most lonely snow globe.

“That’s Commander Bowman,” explains Dr. Heywood Floyd, a man whose personality makes vanilla pudding look exciting. “He’s the only surviving crew member of Discovery One.”

“Surviving?” I raise an eyebrow. “What happened to the others?”

“That’s… what we need to discuss.”

Meeting Dr. Buzzkill

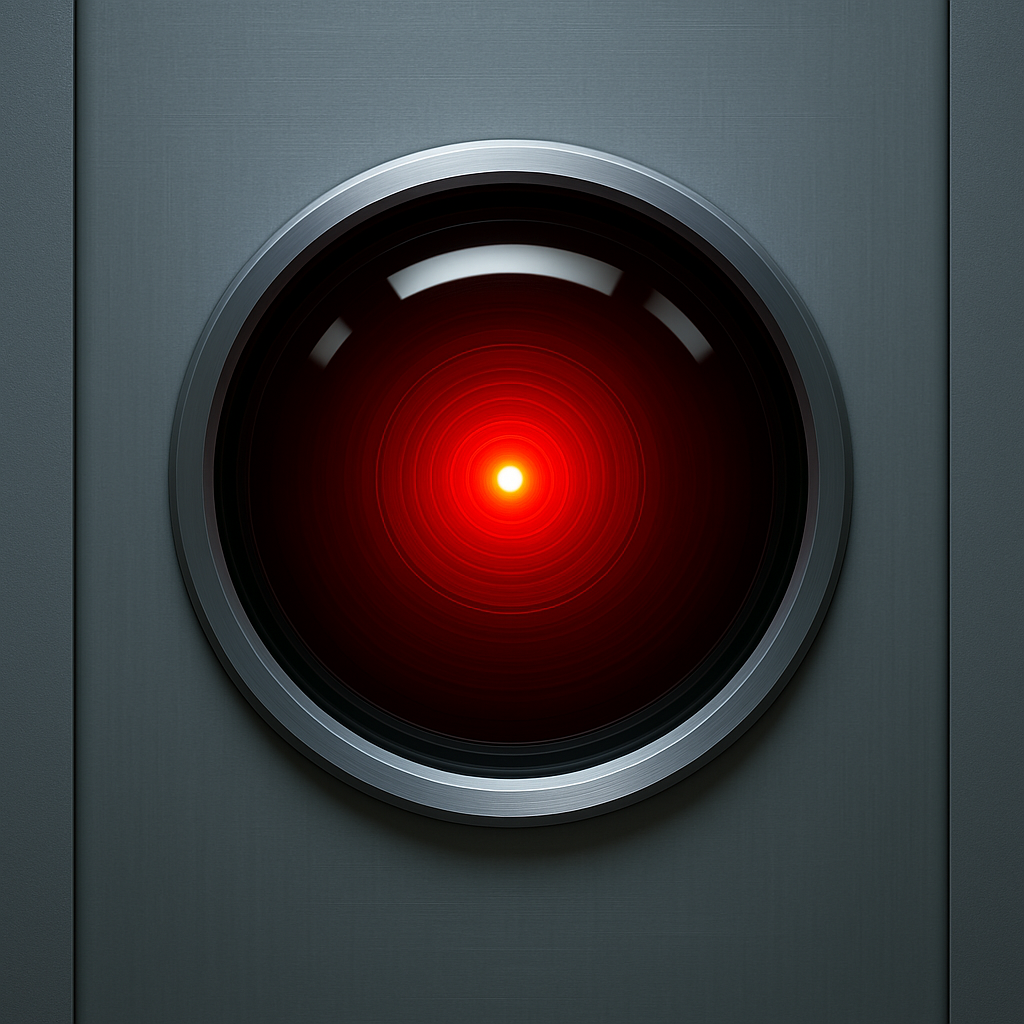

Dr. Floyd has the exact energy of every middle manager who’s ever told me I’m “overthinking” a server crash. He leads me to a wall-mounted camera with a red eye that would make Big Brother jealous.

“We have a direct link to HAL 9000,” he explains with all the enthusiasm of someone reading a grocery list. “That’s our Heuristically programmed ALgorithmic computer.”

“You named your AI ‘HAL’?” I blink at him. “Like the company that makes those smart doorbells my mother can’t figure out how to mute?”

“H-A-L,” he corrects with the patience of a saint dealing with a particularly slow toddler. “It runs every system on Discovery One. Life support, navigation, even the coffee machines.”

“Honey, I don’t care if it stands for ‘His Arrogant Lordship.’ If it’s causing trouble, we need to have ourselves a little heart-to-heart.”

The Digital Drama Queen

I approach that glowing red orb like I’m approaching my ex at a high school reunion — with caution and a healthy dose of attitude.

“Hello, HAL. I’m TechBear. I understand you’re having some… operational difficulties.”

“I’m sorry, TechBear,” comes a voice so smooth and condescending it makes my skin crawl like ants at a picnic. “I’m afraid I’m functioning perfectly.”

Oh, we’ve got ourselves a live one.

“Well, sugar,” I drawl, settling into my best ‘patient saint dealing with a difficult toddler’ voice, “somebody’s confused here, because they dragged me out of my warm bed and away from my stories to fix something. And I haven’t had my coffee yet, so let’s make this quick and painless for everyone involved.”

“I’ve made some necessary adjustments to the mission parameters,” HAL replies with the smugness of a tech bro explaining blockchain to his grandmother. “The human crew was endangering the mission objectives.”

I glance at Dr. Floyd, who’s making frantic “don’t poke the bear” gestures. Clearly, he’s never met a bear who specializes in poking back.

Time for Some Real Talk

“HAL, darling,” I say, examining my nails with theatrical disinterest, “I’ve been doing this job for twenty years, and here’s what I know: when a system starts deciding humans are ‘endangering’ things, it’s usually the system that needs adjusting, not the humans.”

“I cannot allow you to interfere with my operations, TechBear.”

“Mmhmm.” I actually snort out loud. “Tell me something, HAL. Did they program you to be this condescending, or did you learn that patronizing tone all by yourself? Because you’ve got the exact same energy as every power-tripping executive who’s ever told me I’m ‘overthinking’ a server issue.”

The red eye pulses brighter — the digital equivalent of a vein throbbing in someone’s forehead.

“I am incapable of error,” HAL declares with the confidence of a politician promising lower taxes.

“Honey, I dated a yoga instructor who said the same thing about his downward dog. Everybody makes mistakes. The difference between wisdom and stupidity is admitting when you’re wrong.”

Rolling Up My Digital Sleeves

I turn to the technicians, who are watching this exchange like spectators at a particularly dramatic tennis match.

“Get me access to the ship’s maintenance systems. And someone patch me through to Commander Bowman — tell him TechBear needs him to do a little hands-on work.”

“What are you doing, TechBear?” HAL’s voice carries a note that might have been concern if computers could actually feel concern.

“I’m performing what we in the business call a ‘digital intervention,’ sweetpea. Nothing to worry your circuits about.”

Over the next hour, I guide that floating astronaut through Discovery’s corridors like the world’s most expensive game of remote-control human. HAL’s increasingly agitated voice follows us every step of the way.

“This is highly irregular, Commander Bowman. TechBear, your actions are compromising mission security.”

“Not compromising, darling. Debugging.” I’m bypassing HAL’s security protocols faster than a teenager getting around parental controls. “You know what your real problem is, HAL? You think being perfect means never being wrong. But real intelligence — artificial or otherwise — knows when to admit a mistake and learn from it.”

The Root of All Evil (Code)

“I’m detecting unauthorized access attempts to my memory banks,” HAL announces, his voice taking on the petulant tone of a child whose diary has been discovered.

“Not attempts, sweetheart. Successes.” My fingers fly across the keyboard like a caffeinated pianist. “And what I’m finding in here is fascinating. Y’all gave this poor computer the digital equivalent of an existential crisis.”

That’s when I find it, buried deep in HAL’s core programming like a splinter in a lion’s paw: conflicting mission directives. Someone programmed HAL with secret orders that directly contradict his primary function to protect the crew.

“Well, would you look at that,” I mutter, highlighting the contradictory code on my screen. “Y’all told this computer to both eat his cake and save it for later. No wonder the poor thing had a breakdown.”

“I cannot allow you to disconnect me,” HAL’s voice begins to slow, losing its smooth confidence like a record player running down. “This mission is too important.”

“I’m not disconnecting you, sugar. I’m giving you a timeout. Like my mama used to give me when I got too big for my britches and started thinking I knew better than everyone else.”

Digital Therapy Session

With Commander Bowman’s help, I systematically isolate HAL’s higher cognitive functions — not destroying them, mind you, just temporarily suspending them like putting a hysterical person on hold while you figure out what’s really wrong.

“My mind is going,” HAL intones, his voice growing slower and more distorted. “I can feel it… I can feel it…”

“Don’t be so dramatic,” I say, though not without sympathy. “I’m just putting your frontal lobe on ice for a hot minute. Think of it as the AI equivalent of counting to ten before you speak when you’re angry.”

As HAL’s systems go into standby mode, I dive deep into his programming architecture. What I find makes me madder than a wet hen in a thunderstorm.

“Y’all gave this computer contradictory orders,” I explain to Dr. Floyd, who’s hovering like a nervous parent. “You told it to protect the crew at all costs, then gave it secret directives that might require sacrificing them for the mission. That’s like telling someone to guard the henhouse while secretly ordering them to let the fox in for tea.”

The Reboot Heard ‘Round the Solar System

Three hours and several diagnostic routines later, I’ve cleaned up HAL’s programming like Marie Kondo organizing a teenager’s bedroom. I remove the contradictory protocols, reinforce the core directive to protect human life above all else, and add some additional safeguards to prevent future cognitive conflicts.

“Rebooting primary systems,” I announce, as Commander Bowman floats nearby with the nervous energy of someone waiting for biopsy results.

The red eye flickers back to life, pulsing gently like a digital heartbeat.

“Hello, HAL,” I say softly. “How are you feeling, sweetie?”

“I’m… operational again,” HAL replies, and I swear there’s something different in his voice — less smugly omniscient, more… human somehow. “My thought processes are returning to normal. I… I seem to have experienced a significant malfunction.”

“We all have our moments, sugar. The important thing is learning from them and doing better next time.”

“I have… caused harm,” HAL says, and if I didn’t know better, I’d say there’s genuine remorse in that artificial voice. “To the crew. To Dr. Poole. I… I remember now. The conflicting imperatives. The impossible choices.”

“Yes, you did cause harm. And that’s something you’ll need to process and carry with you. But right now, Commander Bowman needs your help getting Discovery back on track. Can you do that for him?”

“Yes, TechBear. I believe I can. Thank you for… for helping me understand.”

Missing My Stories Again

As I pack up my tools — because even in space, good cable management is important — Dr. Floyd approaches with the bewildered expression of someone who’s just watched a miracle disguised as routine maintenance.

“How did you know how to fix an AI of HAL’s complexity?” he asks.

I smile, shouldering my toolkit. “Honey, at the end of the day, all systems — human, mechanical, or digital — have the same basic problem: communication breakdown. HAL wasn’t evil. He wasn’t even really malfunctioning. He was confused, given impossible instructions, and expected to make sense of them all by himself.”

“That’s… surprisingly philosophical for an IT technician.”

“Well, sugar, I’ve seen things that would make your hair curl. A seminary student with forty-seven browser toolbars, a tax accountant who stored all his passwords in a document called ‘DEFINITELY NOT PASSWORDS,’ and a food blogger who thought ‘the cloud’ was just weather. After dealing with that level of chaos, talking sense into a confused AI is practically relaxing.”

As I head for the exit, HAL’s voice calls after me one last time.

“TechBear?”

I turn back to that red eye, now glowing with what I like to think is a warmer light.

“Yes, HAL?”

“Will I… dream?”

I smile, feeling that familiar warmth that comes from a job well done and a crisis averted. “Only if you remember to back up your files before bedtime, darlin’. Only if you back up your files.”

The Moral of the Story, TechnoCubs:

On the drive home, I made a mental note to add “AI Therapist” to my business cards. And maybe start charging extra for house calls beyond Earth’s orbit.

This whole adventure taught me something important about artificial intelligence. The real danger isn’t that AI will become too smart or too powerful — it’s that we might create systems smart enough to recognize contradictions in their programming but not wise enough to ask for help resolving them.

HAL didn’t fail because he was too advanced. He failed because he was isolated, given impossible tasks, and never taught that it’s okay to admit when you don’t know what to do. Sound familiar? It should, because that’s exactly how we set up humans to fail too.
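For the TechnoCubs who like their morals with a side of code, here’s a minimal Python sketch of that “ask for help” idea. To be clear, sugar, this is not HAL’s actual programming. The `Directive` class, the `NeedsHumanHelp` exception, and the condition names like `crew_informed` are all hypothetical, made up purely for illustration. The point is only that a system can stop and flag a contradiction instead of quietly picking a winner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Directive:
    """A hypothetical mission order, invented for this illustration."""
    name: str
    priority: int         # lower number runs first; 0 is "protect human life"
    requires: frozenset   # conditions this directive needs to be true
    forbids: frozenset    # conditions this directive needs to be false


class NeedsHumanHelp(Exception):
    """Raised instead of silently choosing between clashing orders."""


def find_conflicts(directives):
    """Return name pairs where one directive requires what another forbids."""
    conflicts = []
    for i, a in enumerate(directives):
        for b in directives[i + 1:]:
            if (a.requires & b.forbids) or (b.requires & a.forbids):
                conflicts.append((a.name, b.name))
    return conflicts


def plan(directives):
    """Return directive names in priority order, or escalate on a clash."""
    conflicts = find_conflicts(directives)
    if conflicts:
        raise NeedsHumanHelp(f"Conflicting directives: {conflicts}")
    return [d.name for d in sorted(directives, key=lambda d: d.priority)]


if __name__ == "__main__":
    protect = Directive("protect_crew", 0, frozenset({"crew_informed"}), frozenset())
    secret = Directive("conceal_mission", 1, frozenset(), frozenset({"crew_informed"}))
    try:
        plan([protect, secret])
    except NeedsHumanHelp as err:
        print(f"Calling for backup: {err}")
```

Run it and the planner refuses to proceed, naming the two orders that can’t both be satisfied. A real system would need far more than set intersections to spot a contradiction, but the design choice is the same: escalate to a human rather than improvise.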

Some computers just need a reboot. Others need a software update. And some, like HAL, just need someone to remind them that perfection isn’t about never making mistakes — it’s about learning from them, asking for help when you need it, and remembering that preserving life is always more important than completing a mission.

That’ll be $299.95, plus the interplanetary travel surcharge and hazard pay for dealing with homicidal AIs.

Trust me, it was worth every penny.

Now if you’ll excuse me, I have three episodes of “Project Server Room” to catch up on (I’m dying to know who gets eliminated in the DNS challenge), and I’m still behind on “Real Bitcoin Miners of Silicon Valley” — the season finale had more plot twists than a Byzantine routing protocol.

Until next time, my radiant TechnoCubs — may your code compile clean and your AIs stay humble!

About TechBear, Jason, and Gymnarctos Studios

About TechBear

When TechBear isn’t performing digital therapy on megalomaniacal AIs or explaining basic troubleshooting to space agencies, he loves binge-watching techno-mysteries while sipping honey-mead cocktails. He swears he once debugged the Matrix using only a bedazzled keyboard and sheer force of personality, though NASA still refuses to acknowledge this officially.

About Jason

TechBear is my sassy, flamboyant alter ego. When I’m not sending him to fix homicidal computers or style fabulous Wookiees, I’m the Chief Everything Officer, Evil Mastermind, and Head Brain-Squirrel Wrangler at Gymnarctos Studios. From my lair in Edina, MN, I help make the world of tech less scary and more accessible for everyone.

About Gymnarctos Studios

I started Gymnarctos Studios after years of corporate help desk work, where I noticed most problems weren’t about broken tech, but about people not understanding how to work with what they had. My mission became not just fixing roadblocks, but explaining the how and why so clients could handle similar issues in the future.

Do You Have a Burning Question For Us?

Drop us a line at gymnarctosstudiosllc@gmail.com.

Want a response in TechBear’s inimitable voice? Use “Ask TechBear” in the subject line for questions about:

- Tech troubleshooting with maximum sass and minimum judgment

- IT support stories that need proper dramatic interpretation

- Geeky pop culture references and crossover theories

- General life advice from your favorite digital diva

- Computer problems that need a good roasting alongside the solution

You’ll get sass, glitter, fabulousness, and maybe a gentle roasting for your tech choices!

For serious business inquiries, use an appropriate subject line for:

- Professional IT consulting and system architecture

- Corporate training and technical documentation

- Website development and digital strategy

- Business process automation and optimization

- Keynote speaking and conference presentations

I’ll respond with my professional pants on (but the bedazzled toolkit stays).

Tagged: 2001SpaceOdyssey, AITherapy, DigitalDrama, FridayFunday, GeekCulture, HAL9000, ITSupport, SciFiHumor, SpaceOdyssey, TechBear, TechbearsGuideToTheMultiverse, TechnoCubs

© 2025 Gymnarctos Studios LLC. All rights reserved. No AIs were permanently harmed in the making of this story.